Google Gemini AI Photo Editing: Features & Strategy

⚡ Quick Take

Google isn't just launching an AI photo editor; it's strategically embedding generative image capabilities across its entire ecosystem, from casual consumer apps to powerful developer APIs. The move signals a shift from standalone editing tools to a future where image creation and manipulation are conversational, integrated, and highly fragmented, creating both opportunity and complexity for creators and builders.

Summary

Google has rolled out native, AI-powered photo editing within its Gemini ecosystem. Users can modify both uploaded and generated images with conversational prompts, using features such as background replacement, object manipulation, and "multi-turn editing" for iterative refinement.

What happened

The new capabilities are being deployed across multiple surfaces: directly in the Gemini mobile app for consumers, as an integrated "Ask Photos" feature on Pixel devices, and via developer-focused APIs in Google Cloud's Vertex AI and the Firebase AI Logic SDKs. This is not a single product launch but a multi-pronged distribution strategy.

Why it matters now

This move challenges the dominance of specialized tools like Adobe Photoshop and Midjourney by making advanced editing accessible within a conversational interface. It represents a major push to integrate generative AI directly into user workflows, treating image editing as a function of a broader AI assistant rather than a destination application. For people who have ideas but lack the tools or skills to execute them, that could substantially lower the barrier to entry.

Who is most affected

Developers, creators, and enterprises are the key groups. Creators gain powerful, prompt-based tools for rapid content generation. Developers and enterprises now have access to robust APIs (like Gemini 2.5 Flash Image on Vertex AI) to build custom applications, but must navigate a complex landscape of varying feature availability across platforms. The teams building on these APIs are likely to feel the effects most.

The under-reported angle

The real story is the strategic fragmentation of Gemini's image features. What a consumer can do in the Gemini app differs from what a developer can achieve via the Vertex AI API, which offers more precise control, masking, and access to the latest models. This creates a tiered system of access and capability, forcing power users and developers to choose the right entry point for their needs, from simple edits to scalable, AI-powered media workflows. That nuance often gets lost in the hype.

🧠 Deep Dive

Have you ever stared at a photo, wishing you could tweak it without firing up a full-blown editor? Google's rollout of Gemini-powered photo editing grants that wish at massive scale and marks a significant step in the commoditization of generative AI. By embedding these tools directly into the Gemini app, Google is positioning advanced image manipulation not as a professional skill, but as a conversational task. For millions of users, the pain point of navigating complex software like Photoshop is replaced by a short prompt: "change the background to a sunny beach" or "remove the person on the left." This approach, termed "multi-turn editing," allows for an iterative dialogue, making the creative process feel more like a collaboration with an assistant than a session in a technical tool.
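To make the multi-turn idea concrete, here is a minimal sketch of how an editing conversation might accumulate instructions. It is not Google's API: the `EditSession` class, its methods, and the payload shape are all hypothetical, and the only assumption it encodes is that each new request carries the earlier instructions so that later edits build on previous ones.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class EditSession:
    """Hypothetical container for one multi-turn editing conversation."""

    image_ref: str  # placeholder identifier for an uploaded or generated image
    turns: List[str] = field(default_factory=list)

    def add_instruction(self, prompt: str) -> None:
        """Record one conversational edit request, ignoring blank input."""
        cleaned = prompt.strip()
        if cleaned:
            self.turns.append(cleaned)

    def build_request(self) -> Dict[str, object]:
        """Return the image plus every instruction so far, in order.

        Assumption: a real service would receive the full history so that
        later edits build on earlier ones. The payload shape is illustrative.
        """
        return {"image": self.image_ref, "instructions": list(self.turns)}


session = EditSession(image_ref="photo.jpg")
session.add_instruction("change the background to a sunny beach")
session.add_instruction("remove the person on the left")
request = session.build_request()
print(request["instructions"])
```

The point of the sketch is only the ordering: the second prompt is interpreted in the context of the first, which is what separates an iterative dialogue from a series of unrelated one-shot edits.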

That said, the consumer app is just the tip of the iceberg. The true ambition of Google's strategy shows in its parallel deployment across its developer ecosystem. Through Vertex AI and the Firebase AI Logic SDKs, Google is exposing the engine room of its image models, including Gemini 2.5 Flash Image. This developer-first layer offers far more granular control, including mask-based inpainting, support for generating images of people, and access to character consistency features. It is a bifurcated approach that serves two distinct markets: simplified, "good enough" editing for the masses, and powerful, API-driven building blocks for developers creating the next generation of AI-native applications. The dual track is clever: it keeps mainstream users engaged while giving builders the deeper controls they need.

This multi-platform strategy creates an immediate and critical challenge: navigating the feature fragmentation. An analysis of Google's own documentation reveals a capability gap between the consumer-facing Gemini app, the on-device Pixel experience, and the full-featured Vertex AI endpoint. For instance, advanced reproducibility controls, specific model versions, and precise masking are primarily API-level features. This means a creator attempting to build a consistent, professional workflow must piece together a pipeline that might start in one app but rely on API calls for refinement and upscaling. Current coverage largely overlooks that complexity, and it could trip up even experienced professionals.

Ultimately, this strategy is about ecosystem dominance. By providing both easy-to-use front-end tools and powerful back-end APIs, Google is attempting to capture the entire value chain of generative media. The inclusion of SynthID for digital watermarking underscores this ambition, signaling an attempt to build a scalable and "responsible" infrastructure for AI-generated content. While competitors like Adobe integrate Firefly into their creative suite, Google is weaving Gemini into the fabric of its entire cloud and consumer universe, betting that the future of creativity is not in a single app, but in an ambient, multi-surface intelligence layer. It is a bold play, and the open question is how sticky that web will become.

📊 Stakeholders & Impact

Google's fragmented rollout affects different groups in different ways: some get a gentle nudge, others a full overhaul of how they work. The following table breaks down the feature landscape across key platforms.

| Platform / Service | Target Audience | Key Features & Limitations |
|---|---|---|
| Gemini App | Consumers, Casual Creators | Conversational edits, simple prompt-based generation. Easy to use but lacks precision control and advanced features like masking. |
| Pixel "Ask Photos" | Pixel Phone Users | Seamless, on-device conversational editing integrated with Google Photos. Optimized for mobile, privacy-centric workflows. |
| Vertex AI API | Developers, Enterprises | Full power: mask-based editing, people generation, character consistency, fine-grained parameter control. Requires technical expertise. |
| Firebase AI Logic SDKs | App Developers (Web/Mobile) | Simplified SDKs for integrating Gemini image logic into applications. A bridge between ease-of-use and full API power. |
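One way to reason about choosing an entry point is to treat the table above as a capability matrix and filter it by the features a workflow needs. The sketch below does that in plain Python. The feature tags are hypothetical labels derived from the table's wording, not official feature names, and the Firebase entry is an assumption because the table lists no specific features for it.

```python
from typing import Dict, List, Set

# Feature tags are hypothetical labels derived from the table's wording.
CAPABILITIES: Dict[str, Set[str]] = {
    "Gemini App": {"conversational_edits", "prompt_generation"},
    'Pixel "Ask Photos"': {"conversational_edits", "on_device"},
    "Vertex AI API": {
        "masking",
        "people_generation",
        "character_consistency",
        "parameter_control",
    },
    # The table names no specific features for Firebase; this tag is an assumption.
    "Firebase AI Logic SDKs": {"app_sdk_integration"},
}


def platforms_supporting(required: Set[str]) -> List[str]:
    """Return the platforms whose listed features include every required one."""
    return [
        name
        for name, features in CAPABILITIES.items()
        if required <= features  # subset test: all required features present
    ]


print(platforms_supporting({"masking", "character_consistency"}))
print(platforms_supporting({"conversational_edits"}))
print(platforms_supporting({"masking", "on_device"}))
```

Under these labels, the last query returns an empty list: no single surface covers both precise masking and on-device editing, which is the kind of gap that pushes a workflow across more than one entry point.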

✍️ About the analysis

This analysis is an independent i10x synthesis based on official Google product announcements, developer documentation for Vertex AI and Firebase, and hands-on reviews. It is written for developers, product managers, and strategists seeking to understand the architectural and market implications of Google's AI strategy.

🔭 i10x Perspective

What if the scattered pieces of this puzzle are actually the master plan? Google's tiered approach to image generation is not a flaw; it's a strategic playbook for ecosystem capture. By offering accessible tools for consumers while reserving the most powerful capabilities for developers via APIs, Google is commoditizing basic generative editing and simultaneously building a deep, defensible moat around its AI infrastructure.

This puts immense pressure on both self-contained tools like Midjourney and integrated incumbents like Adobe. The next battle isn't just about model quality; it's about the accessibility and composability of AI building blocks. The critical unresolved tension is whether this fragmentation will ultimately empower a new wave of AI-native applications or create a confusing, disjointed user experience that hinders mass adoption of advanced creative workflows. How Google unifies, or fails to unify, this powerful but scattered ecosystem will define its success in the generative media race. That long game is the part worth watching.

Ähnliche Nachrichten

Grok Imagine Enhances AI Image Editing | xAI Update

xAI's Grok Imagine expands beyond generation with in-painting, face restoration, and artifact removal features, streamlining workflows for creators. Discover how this challenges Adobe and Topaz Labs in the AI media race. Explore the deep dive.

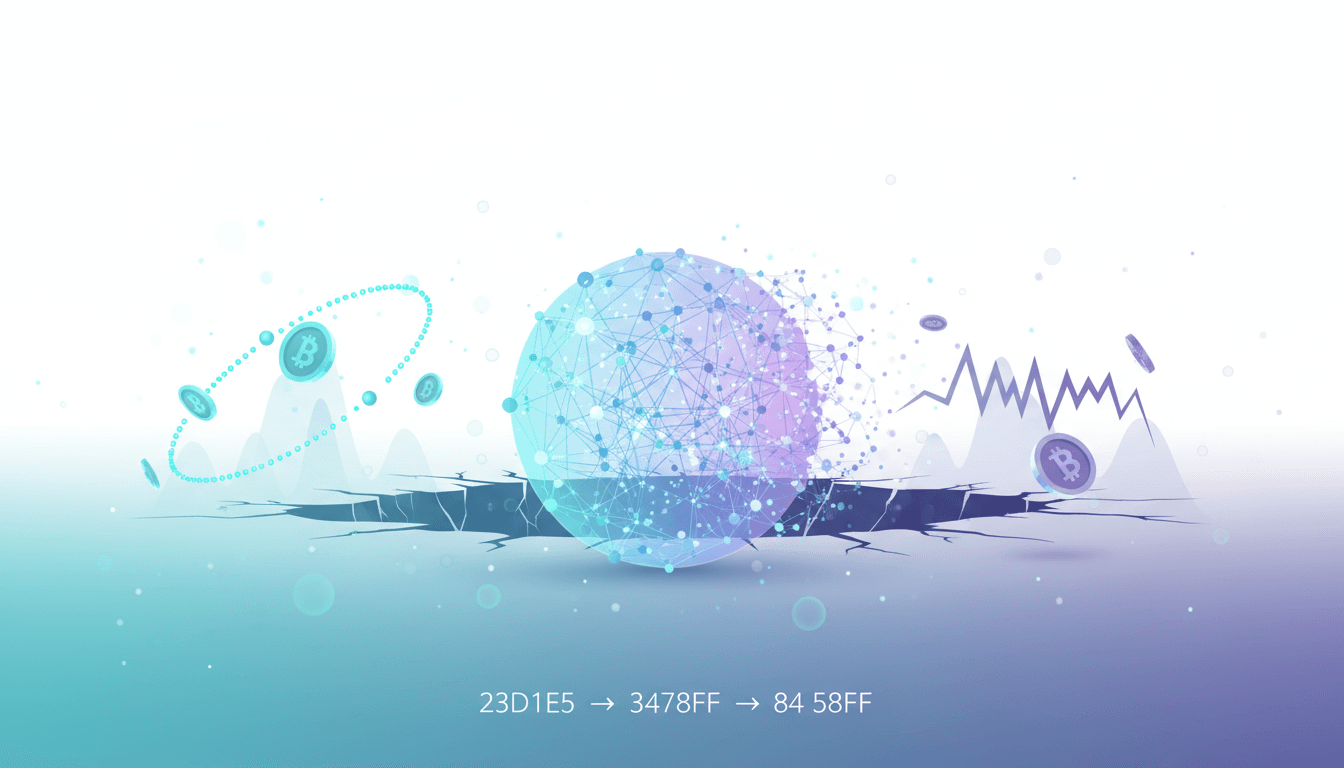

AI Crypto Trading Bots: Hype vs. Reality

Explore the surge of AI crypto trading bots promising automated profits, but uncover the risks, regulatory warnings, and LLM underperformance. Gain insights into real performance and future trends for informed trading decisions. Discover the evidence-based analysis.

xAI Ani: Biometric Data Ethics in AI Companion Launch

xAI's Ani, a 3D AI companion powered by Grok 4, promises affectionate interactions but faces backlash over alleged employee biometric data coercion. Delve into the privacy risks, legal challenges under BIPA and GDPR, and impacts on AI ethics. Explore the analysis.